We’re in the NISQ (noisy intermediate-scale quantum) era of quantum computing, and although these first-wave technologies are still in a relatively early phase of development, they are already being widely explored by early adopters for a variety of applications.

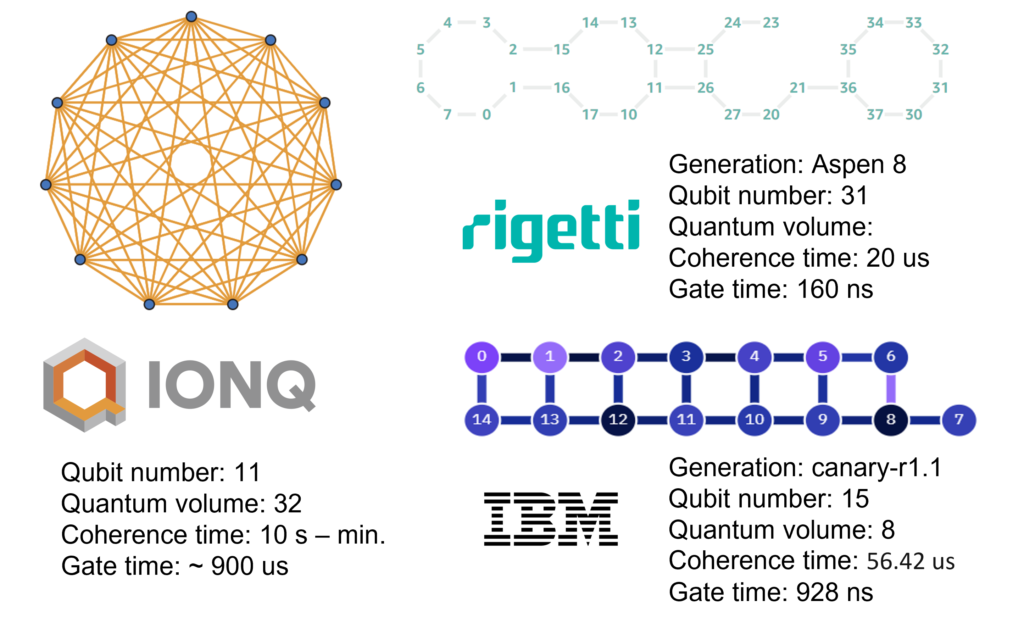

Because they are built on quite different technologies, current quantum processors have individual quirks and subtle differences that could be leveraged for a competitive advantage. Understanding how these manifest in real-life applications has become an important aspect of the field. However, the novelty and unique attributes of the various quantum processors can make it difficult to evaluate and compare these systems.

In this article we look at some of the criteria being used to compare the technological platforms that are currently commercially available, focusing on how the quality of qubits is assessed and how this ultimately affects quantum information processing.

Error correction and fault tolerance through increased qubit number

The race to build the largest and most powerful quantum computer has already begun, and it has driven steady advancement in the number of available qubits. The title for the largest superconducting quantum computer is currently held by Google’s Bristlecone, a 72-qubit processor, closely followed by IBM’s 65-qubit Hummingbird (r2). Outside superconducting hardware, Jiuzhang, a 76-photon photonic device developed by the University of Science and Technology of China (USTC), currently leads the pack, with IonQ’s trapped-ion offerings not far behind.

Although these are impressive technological feats, all of these systems still fall within the NISQ definition of quantum processors: they are based on a small number (fewer than 100) of noisy qubits and are not yet capable of managing or mitigating their error rates. As the systems are scaled up and approach the 100-qubit threshold, the number becomes large enough to start implementing error characterization and mitigation protocols, such as randomized benchmarking, and to develop logical qubits (abstract collections of noisy physical qubits that together act as logic processing nodes). Although scaling up the systems is largely an engineering problem likely to be solved in the (very) near future (see IonQ’s and IBM’s roadmaps for scaling up their systems), we’re not quite there yet.
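To give a feel for why grouping noisy physical qubits into a logical qubit can help, here is a minimal sketch based on the simplest possible scheme: a bit-flip repetition code decoded by majority vote. This is an illustrative assumption, not the code used on any of the devices mentioned above; practical schemes are considerably more involved, and the function name and error rates are hypothetical.

```python
from math import comb

def logical_error_rate(p, n=3):
    """Probability that majority voting over n copies fails.

    Assumes each physical qubit flips independently with probability p
    (a simplified, hypothetical noise model). The vote fails when more
    than half of the n copies have flipped.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of physical qubits")
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )

# Hypothetical physical error rate of 1%
for n in (1, 3, 5):
    print(n, logical_error_rate(0.01, n))
```

Under these assumptions, adding more physical qubits lowers the logical error rate as long as the physical error rate is small, which is why qubit count and qubit quality have to improve together.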

It’s not always about qubit number.

So, although counting qubits does make for good headlines, qubit number isn’t always an important metric for comparing current technologies because, broadly speaking, they all fall in the same range. The qubit number is also not especially useful for determining how suitable a certain technology platform would be for a specific application, which makes it difficult to identify any potential advantage for immediate use cases between the hardware platforms. A natural question then arises: what characteristics besides qubit number can we use to compare NISQ technologies?

Besides increasing qubit numbers, monitoring quality and functionality at the single-qubit and gate level can also be immensely valuable when exploiting current technologies for immediate applications. Thankfully, there are a few key characteristic parameters which can be used for this. For example, the connectivity, or topology, tells us how the qubits are linked and, essentially, which of them can be entangled directly. This is important because as quantum algorithms grow in complexity, so does the required number of qubits and gate operations. These gates generally involve entangling multiple qubits. If the connectivity is limited, additional swap operations are needed to bring the relevant qubits next to each other. This ultimately increases the circuit depth and the time required to run the algorithm. In other words, greater connectivity between qubits may provide an advantage when running larger, more complex algorithms.

Interestingly, the topology is one of the metrics that varies most across technologies. For example, superconducting circuits (and, in general, all solid-state technologies) are printed on chips and form a 2D array of circuit elements. They are limited by how many qubits can be in contact with one another; typically each qubit can be linked to only two or three others. In trapped-ion systems, however, the qubits (individual ions held in an electromagnetic trap) are connected by laser pulses which can be finely controlled, allowing for much greater connectivity.
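The swap overhead described above can be sketched with a small graph search. The example below assumes a naive routing strategy (move one qubit along the shortest path until it sits next to its partner) and two hypothetical 5-qubit devices; real compilers use more sophisticated routing, so treat the numbers as illustrative only.

```python
from collections import deque

def shortest_distance(coupling, start, goal):
    """Breadth-first search for the hop count between two qubits."""
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == goal:
            return dist
        for neighbour in coupling[node]:
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, dist + 1))
    raise ValueError("qubits are not connected")

def swaps_needed(coupling, a, b):
    """SWAPs needed to make qubits a and b adjacent (naive routing)."""
    return max(shortest_distance(coupling, a, b) - 1, 0)

# Hypothetical devices: a linear chain and an all-to-all layout
line = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
full = {q: [p for p in range(5) if p != q] for q in range(5)}

print(swaps_needed(line, 0, 4))  # 3
print(swaps_needed(full, 0, 4))  # 0
```

On the chain, a two-qubit gate between the end qubits costs three extra swaps before it can run, while the fully connected layout needs none.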

Another useful concept for evaluating qubit error rates and overall quantum circuit performance is the fidelity. This metric gives a quantitative estimate of how “close” the outcome of a given gate operation is to an ideal or expected value. Measurement protocols can be incorporated at the single-qubit level as well as after gate operations to determine fidelity values. This tells us about the efficiency of the circuit and how prone to error it may be.
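As a rough illustration of what “closeness” means here, the sketch below computes the fidelity between two pure single-qubit states as the squared overlap of their amplitudes. The over-rotation angle is a made-up example of a gate error, and real devices report fidelities estimated from many measurements rather than from known state vectors.

```python
import math

def state_fidelity(ideal, actual):
    """Fidelity |<ideal|actual>|^2 between two normalized pure states."""
    overlap = sum(a.conjugate() * b for a, b in zip(ideal, actual))
    return abs(overlap) ** 2

# Ideal result of a Hadamard gate applied to |0>
ideal = [1 / math.sqrt(2), 1 / math.sqrt(2)]

# Hypothetical imperfect gate that over-rotates by 0.05 rad
theta = math.pi / 4 + 0.05
actual = [math.cos(theta), math.sin(theta)]

print(round(state_fidelity(ideal, actual), 4))  # 0.9975
```

A fidelity of 1 means the gate produced exactly the expected state; anything lower reflects error that accumulates as more gates are applied.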

As with any computational technology, the time to execute a logic operation is important, and it is no different with quantum processors. Here the characteristic time frames are defined at the single-qubit level by decoherence and relaxation times, and at the circuit level by gate control times. The relaxation and decoherence rates set the limit on how long the qubits’ quantum state is viable for logic processing. This means that any algorithm should be executed within this limiting time frame, which in turn restricts how long the controlling operations (gate times) can be. As one can imagine, these properties also vary greatly across technologies, with trapped-ion systems having relaxation and coherence times as long as seconds but gate control times in the microsecond range, and superconducting systems typically showing coherence times in the range of microseconds but gate times as short as tens of nanoseconds.
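A back-of-the-envelope way to compare these time scales is to ask how many sequential gates fit inside one coherence time. The sketch below does exactly that; the two device profiles are hypothetical round numbers chosen to match the orders of magnitude mentioned above, not measured values for any specific processor.

```python
def gate_budget(coherence_time_ns, gate_time_ns):
    """Rough upper bound on sequential gates within one coherence time.

    Both arguments are integers in nanoseconds. This ignores gate errors,
    readout time and parallelism, so it is only an order-of-magnitude guide.
    """
    return coherence_time_ns // gate_time_ns

# Hypothetical profiles (illustrative values only)
long_coherence_slow_gates = gate_budget(1_000_000_000, 10_000)  # 1 s, 10 us
short_coherence_fast_gates = gate_budget(100_000, 50)           # 100 us, 50 ns

print(long_coherence_slow_gates)   # 100000
print(short_coherence_fast_gates)  # 2000
```

The ratio, rather than either number on its own, is what bounds the usable circuit depth.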

Taking all of these different properties into account can be a tough task, and because of this an alternative and more universal concept, called quantum volume, was put forward by IBM in 2019. Quantum volume is a single number designed to show the all-round performance of a given quantum processor, independent of the hardware it’s based on. The quantum volume considers several features of a quantum computer, including its number of qubits, gate and measurement errors, crosstalk, and connectivity. Unlike classical computer benchmarks, which determine the speed of the computer, this benchmark focuses on how big a program (circuit) can be run on a quantum computer. For example, if a quantum computer is composed of many qubits but has low gate fidelity (i.e. a high error rate), its quantum volume score will be low. Likewise, a quantum computer with high gate fidelity but a low qubit number will have a low quantum volume score. This value has become one of the most widely accepted metrics for comparing different technologies.
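The way the score is derived can be sketched as follows, assuming the commonly described procedure: random “square” circuits (width equal to depth) are run at increasing sizes, and a size passes if the measured heavy-output probability stays above two thirds. The quantum volume is then 2 raised to the largest passing size. The function name and the measurement data below are hypothetical, and the statistical confidence check used in practice is left out.

```python
def quantum_volume(heavy_output_prob, threshold=2 / 3):
    """Quantum volume from per-width heavy-output probabilities.

    heavy_output_prob maps circuit width n (with depth n) to the mean
    heavy-output probability measured for random circuits of that size.
    """
    passed = [n for n, p in heavy_output_prob.items() if p > threshold]
    return 2 ** max(passed, default=0)

# Hypothetical results: many qubits available, but errors grow quickly
many_noisy_qubits = {2: 0.72, 3: 0.68, 4: 0.61, 5: 0.55, 6: 0.52}
# Hypothetical results: only four qubits, but high-fidelity gates
few_clean_qubits = {2: 0.84, 3: 0.82, 4: 0.80}

print(quantum_volume(many_noisy_qubits))  # 8
print(quantum_volume(few_clean_qubits))   # 16
```

In this made-up comparison the smaller but cleaner device scores higher, which mirrors the point that neither qubit count nor fidelity alone determines the result.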

Thankfully, many of these defining device parameters and operation metrics are readily available these days. For example, Amazon Braket supplies this information for the three QPUs it provides access to. The same is true for IBM, which has a detailed list of accessible QPUs and their performance metrics on the IBM-QE API.

Although a lot of attention is being directed at the scalability of quantum systems, R&D focused on error correction and improved quality at the single-qubit level is just as important, especially for pushing NISQ technologies into mainstream use. This of course provides ample opportunity for innovation. A good example of this is the Paris-based Alice & Bob. Their focus is on developing a unique technological offering, a self-correcting superconducting device called a cat qubit, that would allow for fault-tolerant and universal gate-based quantum computing. This would essentially require far fewer qubits than conventional quantum processors and massively reduce operation and fabrication costs. Advances are also being made on the measurement and control side, for example by Q-CTRL, which has several software platforms that help evaluate qubit quality and error rates. A number of companies focused on providing benchmarking solutions for the various technologies have also recently emerged. A notable example is the Canadian-based Quantum Benchmark Inc., which has recently developed a proprietary technology for performance validation, error mitigation and error characterization of quantum processors.

So, although assessing which NISQ-era technology best suits your computational needs may seem confusing at first, there is already a range of metrics and software platforms available to help you navigate your way through this rapidly evolving industry.